via Better Humans


Our brains are incredible things.

Every minute of every day our minds absorb tremendous amounts of new information.

Some of this information we consciously think about, question, work on, mull over, and attempt to solve.

However, the conscious part of our brain can only focus on one thing at a time. To make matters more complicated, we often have to think and act quickly.

Our brains will often use shortcuts to help us out. These shortcuts are called heuristics.

These mental shortcuts are incredibly useful and they’re often very accurate. That’s why our brains evolved to use them in the first place.

Unfortunately for us, heuristics aren’t infallible. Sometimes things aren’t exactly as they appear on the surface; for example, a familiar situation may have been subtly changed, or it may be genuinely unique. In these instances, relying on heuristics can seriously hurt us and lead us to make bad decisions.

When our heuristics fail to produce a correct judgment, the result is a cognitive bias, which is the tendency to draw an incorrect conclusion in a certain circumstance based on cognitive factors.

Cognitive biases can affect us in all aspects of life, from shopping to relationships, from jury verdicts to job interviews. Cognitive biases are especially important for investors, whose main goal should be to think as rationally and logically as possible in order to find the true value of a business.

Therefore, an awareness of the heuristics your brain uses and the cognitive biases they can cause is imperative if you want to be a successful investor.

As the father of value investing, Benjamin Graham, noted in The Intelligent Investor:

If you’ve read Thinking, Fast and Slow by Daniel Kahneman (a book I highly recommend), then you probably already know some of the most important heuristics and cognitive biases that affect us nearly every day – and, importantly, that affect investors when we make capital allocation decisions.

However, there are dozens and dozens of heuristics and cognitive biases that can affect our thinking. Wikipedia’s list of cognitive biases includes 175 of them. That’s a lot of biases to keep track of.

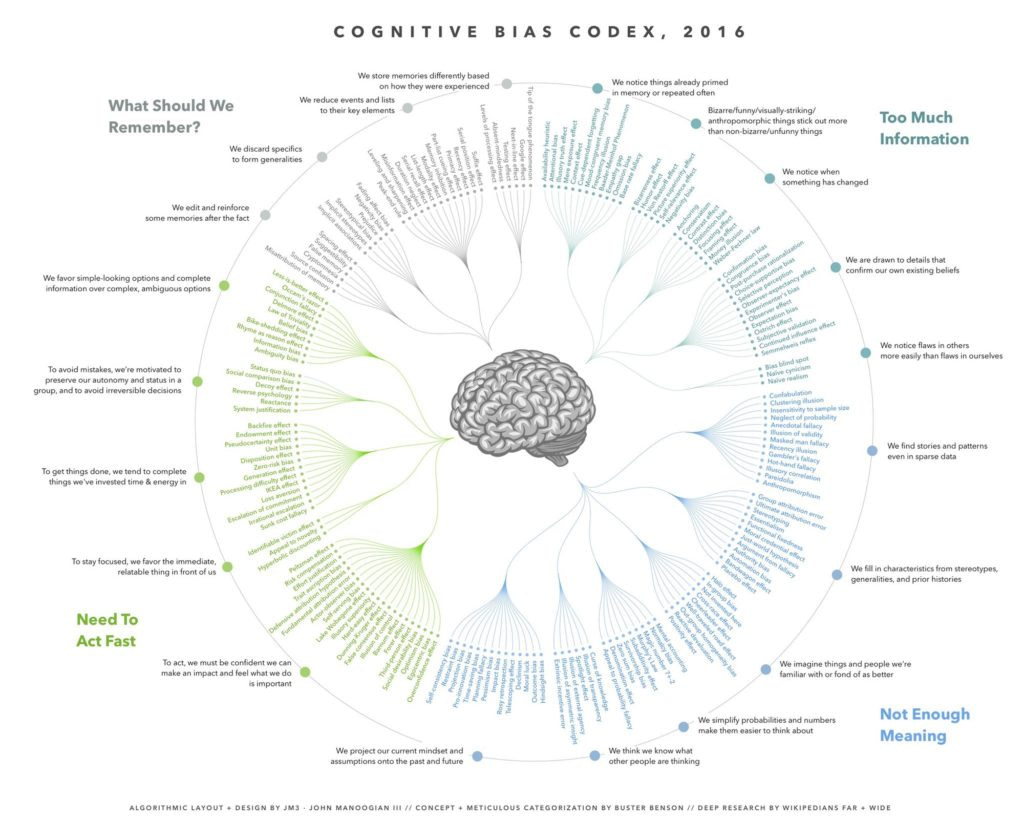

Luckily, the list of cognitive biases becomes much easier to deal with if we condense it into a few broad groups. Buster Benson over at Better Humans sorted the 175 heuristics and cognitive biases into four categories based on the problems they help our brains solve.

The 4 Problems Heuristics Help Us Solve

As Buster points out, every heuristic exists for a reason, primarily to save our brains time or energy. When we look at them based on the main problems that they’re trying to solve, it becomes a lot easier to understand why they exist, how they’re useful, and what mental errors (cognitive biases) they can introduce.

The four main problems heuristics help us solve are:

- Information overload

- Lack of meaning

- The need to act fast

- How to know what needs to be remembered for later

Problem 1: Too Much Information

Because there is just too much information in the world, our brains have no choice but to filter most of it out. Our brains use a few simple tricks to pick out the bits of information that are most likely going to be useful to us in some way.

- We notice things that are already primed in memory or repeated often.

See: Availability heuristic, Attentional bias, Illusory truth effect, Mere exposure effect, Context effect, Cue-dependent forgetting, Mood-congruent memory bias, Frequency illusion, Baader-Meinhof Phenomenon, Empathy gap, Omission bias, Base rate fallacy.

- Bizarre, funny, surprising, and unique things stick out more than common, boring, expected things.

See: Bizarreness effect, Humor effect, Von Restorff effect, Picture superiority effect, Self-relevance effect, Negativity bias.

- We use comparisons to evaluate similar things, and we notice when something has changed (we often make decisions based on the direction or magnitude of a change, rather than re-evaluating the new value as if it were presented alone).

See: Anchoring, Contrast effect, Focusing effect, Money illusion, Framing effect, Weber–Fechner law, Conservatism, Distinction bias.

- We are drawn to details that confirm our own existing beliefs and ignore details that contradict them.

See: Confirmation bias, Congruence bias, Post-purchase rationalization, Choice-supportive bias, Selective perception, Observer-expectancy effect, Experimenter’s bias, Observer effect, Expectation bias, Ostrich effect, Subjective validation, Continued influence effect, Semmelweis reflex.

- We notice flaws in others more easily than flaws in ourselves.

See: Bias blind spot, Naïve cynicism, Naïve realism.

Problem 2: Not Enough Meaning

The world is very confusing. Even once we reduce the amount of information we process through the heuristics and cognitive biases above, we still can’t always understand it easily. So, our minds connect the dots and fill in the gaps with what we think we already know.

- We find stories and patterns even in sparse data.

See: Confabulation, Clustering illusion, Insensitivity to sample size, Neglect of probability, Anecdotal fallacy, Illusion of validity, Masked man fallacy, Recency illusion, Gambler’s fallacy, Hot-hand fallacy, Illusory correlation, Pareidolia, Anthropomorphism.

- We fill in characteristics from stereotypes, generalities, and prior histories whenever there are new specific instances or gaps in information.

See: Group attribution error, Ultimate attribution error, Stereotyping, Essentialism, Functional fixedness, Moral credential effect, Just-world hypothesis, Argument from fallacy, Authority bias, Automation bias, Bandwagon effect, Placebo effect.

- We imagine things we’re familiar with or personally like as better than things we aren’t familiar with or don’t like.

See: Halo effect, In-group bias, Out-group homogeneity bias, Cross-race effect, Cheerleader effect, Well-traveled road effect, Not invented here, Reactive devaluation, Positivity effect.

- We simplify probabilities and numbers to make them easier to think about.

See: Mental accounting, Normalcy bias, Appeal to probability fallacy, Murphy’s Law, Subadditivity effect, Survivorship bias, Zero sum bias, Denomination effect, Magic number 7±2.

- We think we know what others are thinking based on what we’re thinking or based on a simplified version of reality.

See: Curse of knowledge, Illusion of transparency, Spotlight effect, Illusion of external agency, Illusion of asymmetric insight, Extrinsic incentive error.

- We project our current mindset and assumptions onto the past and future.

See: Hindsight bias, Outcome bias, Moral luck, Declinism, Telescoping effect, Rosy retrospection, Impact bias, Pessimism bias, Planning fallacy, Time-saving bias, Pro-innovation bias, Projection bias, Restraint bias, Self-consistency bias.

Problem 3: The Need to Act Fast

Once we have reduced the stream of information we process and made assumptions about the data, we have to act on it quickly.

- In order to help us actually take action, we first need to feel confident in our ability to make an impact and to feel like what we’re doing is important.

See: Overconfidence effect, Egocentric bias, Optimism bias, Social desirability bias, Third-person effect, Forer effect, Barnum effect, Illusion of control, False consensus effect, Dunning-Kruger effect, Hard-easy effect, Illusory superiority, Lake Wobegon effect, Self-serving bias, Actor-observer bias, Fundamental attribution error, Defensive attribution hypothesis, Trait ascription bias, Effort justification, Risk compensation, Peltzman effect.

- In order to stay focused, we favor the immediate, relatable thing in front of us over the delayed and distant.

See: Hyperbolic discounting, Appeal to novelty, Identifiable victim effect.

- In order to get anything done, we’re motivated to complete things that we’ve already invested time and energy in.

See: Sunk cost fallacy, Irrational escalation, Escalation of commitment, Loss aversion, IKEA effect, Processing difficulty effect, Generation effect, Zero-risk bias, Disposition effect, Unit bias, Pseudocertainty effect, Endowment effect, Backfire effect.

- In order to avoid mistakes, we’re motivated to preserve our autonomy and status in a group, and to avoid irreversible decisions.

See: System justification, Reactance, Reverse psychology, Decoy effect, Social comparison bias, Status quo bias.

- We favor options that appear simple or that have more complete information over more complex or ambiguous options (even if the complicated option is more important).

See: Ambiguity bias, Information bias, Belief bias, Rhyme as reason effect, Bike-shedding effect, Law of Triviality, Conjunction fallacy, Occam’s razor, Less-is-better effect.

Problem 4: What Should We Remember?

With so much information presented to us all the time and a limited amount of memory to store it all, even after we reduce the data stream, fill in the gaps, and act on it, we must finally choose what is important for us to remember and what we can safely forget.

- We edit and reinforce some memories after the fact, sometimes changing or adding details that weren’t there before.

See: Misattribution of memory, Source confusion, Cryptomnesia, False memory, Suggestibility, Spacing effect.

- We discard specifics to form generalities.

See: Implicit associations, Implicit stereotypes, Stereotypical bias, Prejudice, Negativity bias, Fading affect bias.

- We reduce events and lists to their key elements, picking out a few items to represent the whole.

See: Peak–end rule, Leveling and sharpening, Misinformation effect, Duration neglect, Serial recall effect, List-length effect, Modality effect, Memory inhibition, Part-list cueing effect, Primacy effect, Recency effect, Serial position effect, Suffix effect.

- We store memories differently based on how they were experienced, and not necessarily based on the information’s value to us.

See: Levels of processing effect, Testing effect, Absent-mindedness, Next-in-line effect, Tip of the tongue phenomenon, Google effect.

How to Combat the Negative Effects of Heuristics and Cognitive Biases

We are faced with too much information, so we filter out unimportant things. Once we have reduced the stream of information to only what is the most useful to us, we create meaning by filling in the gaps of what we don’t know. Then we need to act fast, so we jump to conclusions. Finally, once all is said and done, we try to remember only the most important and useful bits.

Remember, heuristics aren’t inherently bad. They are actually quite useful to us 95% of the time because they solve those four problems mentioned above – information overload, lack of meaning, the need to act fast, and the decision about what to remember. Without them, we wouldn’t be able to effectively move through life.

But you need to be aware of the downsides that these heuristics and cognitive biases can cause the other 5% of the time.

You need to detach yourself from whatever situation you’re in and be aware of how your mind is thinking – not just what it is thinking. This is especially important when you’re investing.

So, keep in mind at all times that the way heuristics solve these four problems creates the following secondary problems:

- We don’t always see everything. Just as a sieve can let some precious stones slip through its holes, sometimes the information we filter out is actually useful and important to us.

- Sometimes the conclusions our minds immediately jump to don’t reflect what’s actually true. Just like an optical illusion can cause us to see shapes, lines, and colors that aren’t actually there, our heuristics and biases can result in cognitive illusions by causing us to construct stories and imagine details that aren’t actually true.

- Quick decisions can be the wrong decisions. We all have gut instincts and things we feel are true. If these instincts are misinformed because of a cognitive bias, then our actions can be the wrong actions.

- Most people don’t have photographic memories. Our memories are partly based on reality and partly based on the act of remembering itself. These memories then inform the cognitive biases in the other three groups above, creating a self-reinforcing cycle.

You don’t need to memorize every single heuristic or cognitive bias.

But you do need to be aware of them, which means you need to be familiar with them and at least know that they exist. You can become more familiar with them by reviewing the list of cognitive biases above. Keeping them organized based on the four problems Buster Benson identified should certainly help as well.

Or you can check out the Cognitive Bias Codex graphic below. If you want, print it out and hang it up somewhere where you’ll see it all the time… or even set it as your desktop wallpaper!

via Better Humans


If you want to learn more about heuristics and cognitive biases, check out 6 Cognitive Biases, Heuristics, and Illusions That Daniel Kahneman Thinks Investors Should Know and Nine Cognitive Biases You Need to Understand to Master Your Money.

Or, better yet, read the three books listed below.

Thinking, Fast and Slow

BY DANIEL KAHNEMAN

Engaging the reader in a lively conversation about how we think, Kahneman reveals where we can and cannot trust our intuitions and how we can tap into the benefits of slow thinking. He offers practical and enlightening insights into how choices are made in both our business and our personal lives―and how we can use different techniques to guard against the mental glitches that often get us into trouble. Winner of the National Academy of Sciences Best Book Award and the Los Angeles Times Book Prize and selected by The New York Times Book Review as one of the ten best books of 2011, Thinking, Fast and Slow is a classic.

The Undoing Project: A Friendship That Changed Our Minds

BY MICHAEL LEWIS

How a Nobel Prize–winning theory of the mind altered our perception of reality.

Forty years ago, Israeli psychologists Daniel Kahneman and Amos Tversky wrote a series of breathtakingly original studies undoing our assumptions about the decision-making process. Their papers showed the ways in which the human mind erred, systematically, when forced to make judgments in uncertain situations. Their work created the field of behavioral economics, revolutionized Big Data studies, advanced evidence-based medicine, led to a new approach to government regulation, and made much of Michael Lewis’s own work possible. Kahneman and Tversky are more responsible than anybody for the powerful trend to mistrust human intuition and defer to algorithms.

This story about the workings of the human mind is explored through the personalities of two fascinating individuals so fundamentally different from each other that they seem unlikely friends or colleagues. In the process they may well have changed, for good, mankind’s view of its own mind.

The Intelligent Investor: The Classic Text on Value Investing

BY BENJAMIN GRAHAM

The greatest investment advisor of the twentieth century, Benjamin Graham taught and inspired people worldwide. Graham’s philosophy of “value investing” — which shields investors from substantial error and teaches them to develop long-term strategies — has made The Intelligent Investor the stock market bible ever since its original publication in 1949.