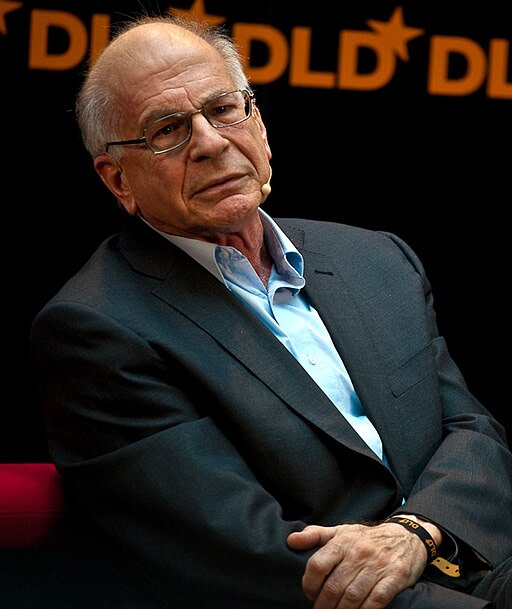

Daniel Kahneman is a professor emeritus of psychology at Princeton, winner of the 2002 Nobel Memorial Prize in Economic Sciences, and author of Thinking, Fast and Slow, the best-selling book on cognitive biases and heuristics.

Heuristics are mental shortcuts that we use to solve problems and reach judgments.

In an episode of Bloomberg’s Masters in Business podcast, Daniel Kahneman sat down with host Barry Ritholtz to discuss his early work on heuristics and to break down the cognitive biases that investors should know about.

Key Takeaways

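- Attribute Substitution – when faced with a difficult judgment, we tend to answer a simpler, related question instead and believe we have answered the original one.

- Availability Heuristic – we build the best story we can from the information in front of us rather than waiting for more, so mental shortcuts play a huge role in forming opinions.

- Anchoring Bias – the first piece of information we receive disproportionately influences our subsequent judgments and decisions.

- Loss Aversion – losses weigh more heavily than equivalent gains (by roughly 2-to-1), which skews our decisions about risk.

- Narrow Framing – we evaluate each decision in isolation instead of as one of many similar decisions, which makes us more risk-averse than a broader view would justify.

- Theory-Induced Blindness / Hindsight Bias – once we accept a theory or story, we struggle to see its flaws, and after the fact we believe we saw the outcome coming.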

Here are the cognitive biases Kahneman discussed in the interview:

1. Attribute Substitution

📖 Definition: Attribute substitution occurs when an individual has to make a judgment (of a target attribute) that is computationally complex and instead substitutes a more easily calculated heuristic attribute.

“You ask someone a complicated question, like: What is the probability of an event? And they can’t answer it because it’s very difficult. But there are easier questions that are related to that one that they can answer. Such as: Is this a surprising event? That is something that people know right away. Is it a typical result of that kind of mechanism? And people can answer that right away.

So what happens is people take the answer to the easy question, they use it to answer the difficult question, and they think they have answered the difficult question. But in fact, they haven’t – they’ve answered an easier one.

I call it attribute substitution – to substitute one question for another. So if I ask you: How happy are you these days? Now you know your mood right now – so you’re very likely to tell me your mood right now and think that you’ve answered the more general question of ‘How happy are you these days?’”

2. Availability Heuristic and “What You See Is All There Is” (WYSIATI)

📖 Definition: The availability heuristic is a mental shortcut that relies on immediate examples that come to a given person’s mind when evaluating a specific topic, concept, method, or decision.

“People are really not aware of information that they don’t have. The idea is that you take whatever information you have, and you make the best story possible out of that information. And the information you don’t have – you don’t feel that it’s necessary.

I have an example that I think brings that out: I tell you about a national leader and that she is intelligent and firm. Now, do you have an impression already of whether she’s a good leader or a bad leader? You certainly do. She’s a good leader. But the third word that I was about to say is ‘corrupt.’

The point is that you don’t wait for information that you didn’t have. You formed an impression as we were going from the information that you did have. And this is “What You See Is All There Is” (WYSIATI).”

3. Anchoring Bias

📖 Definition: Anchoring describes the common human tendency to rely too heavily on the first piece of information offered (the “anchor”) when making decisions.

“I’ll give you an example. In the example of negotiation, many people think that you have an advantage if you go second. But actually, the advantage is going first. And the reason is in something about the way the mind works. The mind tries to make sense out of whatever you put before it. So this built-in tendency that we have of trying to make sense of everything that we encounter, that is a mechanism for anchoring.”

4. Loss Aversion

📖 Definition: Loss aversion refers to people’s tendency to prefer avoiding losses to acquiring equivalent gains: it is worse to lose one’s jacket than to find one. Some studies have suggested that losses are twice as powerful, psychologically, as gains.

“Losses loom larger than gains. And we have a pretty good idea of by how much they loom larger than gains, and it’s by about 2-to-1.

An example is: I’ll offer you a gamble on the toss of a coin. If it shows tails, you lose $100. And if it shows heads, you win X. What would X have to be for that gamble to become really attractive to you? Most people – and this has been well established – demand more than $200… meaning it takes $200 of potential gain to compensate for $100 of potential loss when the chances of the two are equal. So that’s loss aversion. It turns out that loss aversion has enormous consequences.”
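The 2-to-1 arithmetic can be made concrete with a small Python sketch. It assumes a simplified, piecewise-linear value function (the helpers `felt_value`, `coin_toss_value`, and `break_even_win` are hypothetical names) in which gains count at face value and losses are multiplied by a loss-aversion coefficient of 2, the rough ratio Kahneman cites. This is an illustration only, not Kahneman and Tversky's full prospect-theory value function, which is also curved.

```python
# Minimal sketch of a piecewise-linear, loss-averse value function.
# Assumption: LAMBDA = 2.0 approximates the "about 2-to-1" ratio from the interview.
LAMBDA = 2.0


def felt_value(outcome: float, loss_aversion: float = LAMBDA) -> float:
    """Gains count at face value; losses are scaled up by loss_aversion."""
    return outcome if outcome >= 0 else loss_aversion * outcome


def coin_toss_value(win: float, loss: float, loss_aversion: float = LAMBDA) -> float:
    """Loss-averse value of a 50/50 bet: win `win` on heads, lose `loss` on tails."""
    return 0.5 * felt_value(win, loss_aversion) + 0.5 * felt_value(-loss, loss_aversion)


def break_even_win(loss: float, loss_aversion: float = LAMBDA) -> float:
    """Win X at which the bet feels neutral: 0.5 * X = 0.5 * loss_aversion * loss."""
    return loss_aversion * loss


if __name__ == "__main__":
    print(break_even_win(100))        # 200.0
    print(coin_toss_value(150, 100))  # 75 - 100 = -25.0, so the bet feels bad
    print(coin_toss_value(250, 100))  # 125 - 100 = 25.0, so the bet feels attractive
```

With a coefficient of 2, a bet that wins $150 has a positive expected value of $25 in plain dollars, yet it "feels" like -$25 and gets rejected; only wins above $200 make the gamble feel attractive.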

What is it about losses that makes them so much more painful than gains are pleasurable? In other words, why does this 2-to-1 loss aversion even exist?

“This is evolutionary. You would imagine that in evolution, threats are more important than opportunities. And so it’s a very general phenomenon that bad things sort of preempt or are stronger than good things in our experience. So loss aversion is a special case of something much broader.”

So there’s always another opportunity coming along, another game, another deer coming by, but an actual, genuine loss – hey, that’s permanent, and you don’t recover from that.

“That’s right. Anyway, you take it more seriously. So if there is a deer in your sights and a lion, you are going to be busy about the lion and not the deer.”

That leads to the obvious question: what can investors do to protect themselves against this hard-wired loss aversion?

“There are several things they can do. One is not to look at their results – not to look too often at how well they’re doing.”

And today you can look tick by tick, minute by minute. It’s the worst thing that could happen.

“It’s a very, very bad idea to look too often. When you look very often, you are tempted to make changes, and where individual investors lose money is when they make changes in their allocation. On average, virtually whenever an investor makes a move, it’s likely to lose money, because there are professionals on the other side betting against the kind of moves that individual investors make.”
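Why does checking often make things worse? One way to see it, often discussed under the name myopic loss aversion, is to estimate how often a single look at a portfolio shows a loss. The Python sketch below assumes, purely for illustration, normally distributed returns with a 7% annual mean and 20% annual volatility; `ANNUAL_MEAN` and `ANNUAL_VOL` are made-up parameters, not figures from the interview or from any market data.

```python
import math

# Hypothetical assumptions (NOT from the interview): i.i.d. normal returns
# with a 7% annual mean and 20% annual volatility.
ANNUAL_MEAN = 0.07
ANNUAL_VOL = 0.20


def normal_cdf(z: float) -> float:
    """Standard normal CDF computed with the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_of_seeing_loss(horizon_years: float,
                        mean: float = ANNUAL_MEAN,
                        vol: float = ANNUAL_VOL) -> float:
    """P(return over the horizon < 0), with return ~ N(mean * t, vol * sqrt(t))."""
    z = mean * horizon_years / (vol * math.sqrt(horizon_years))
    return normal_cdf(-z)


if __name__ == "__main__":
    for label, years in [("daily", 1 / 252), ("monthly", 1 / 12),
                         ("yearly", 1.0), ("every 10 years", 10.0)]:
        print(f"{label:>15}: {prob_of_seeing_loss(years):.0%} chance a check shows a loss")
```

Under these assumptions, a daily check shows a loss close to half the time (about 49%), while a check once every ten years shows one only about 13% of the time. Combined with losses weighing roughly twice as much as gains, frequent checking means frequent pain, and more temptation to make the allocation changes Kahneman warns against.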

5. Narrow Framing

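📖 Definition: Narrow framing is the tendency to evaluate each decision or risk in isolation rather than as one of many similar decisions made over time. More broadly, framing refers to the context in which a decision is presented, which can itself influence the choice.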

“If I ask a regular person in the street, would you take a gamble on the toss of a coin where, if you lose, you lose $100, and if you win, you win $180… most people don’t like it… Now when you ask the same people in the street, okay, you don’t want this one [coin toss], would you take ten [coin tosses]?

So we’ll toss ten coins, and every time if you lose, you lose $100, and if you win, you win $180, everybody wants the ten – nobody wants the one. In the repeated play, when the game is repeated, then people become much closer to risk neutral and they see the advantage of gambling.

One question that I ask people when I tell them about that – so you’ve turned down $180, but you would accept ten of those – are you on your deathbed? That’s the question I ask. Is that the last decision you’re going to make? And clearly, there are going to be more opportunities to gamble, perhaps not exactly the same gamble, but there’ll be many more opportunities.

You need to have a policy for how you deal with risks and then make your individual decisions in terms of a broader policy. Then you’ll be much closer to rationality.

It’s very closely related to What You See Is All There Is. We tend to see decisions in isolation. We don’t see the decision about whether I take this gamble as one of many similar decisions that I’m going to make in the future.”

So are people overly outcome-focused to the detriment of the process?

“What they are, we call ‘narrow framing.’ They view the situation narrowly. And that is true in all domains. So, for example, we say that people are myopic – that they have a narrow time horizon. To be more rational, you want to look further in time, and then you’ll make better decisions.

If you’re thinking of where you will be a long time from now, it’s completely different from thinking about how will I feel tomorrow if I make this bet and I lose.”
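The arithmetic behind “everybody wants the ten – nobody wants the one” can be checked exactly. The Python sketch below enumerates every outcome of n fair tosses of the $180/$100 bet, then compares a broad frame (judge all the tosses as one bundle) with a narrow frame (judge each toss on its own and add up the feelings). The loss-aversion coefficient of 2 and all helper names are illustrative assumptions, not anything from the interview.

```python
from fractions import Fraction
from math import comb

# The bet described in the interview: a fair coin, win $180 or lose $100.
WIN, LOSS = 180, 100
# Assumption (illustrative): losses feel about twice as heavy as gains.
LAMBDA = 2


def outcome_distribution(n_tosses: int) -> list[tuple[int, Fraction]]:
    """Exact (net dollars, probability) pairs for n independent fair tosses."""
    total = 2 ** n_tosses
    return [
        (WIN * k - LOSS * (n_tosses - k), Fraction(comb(n_tosses, k), total))
        for k in range(n_tosses + 1)  # k = number of winning tosses
    ]


def expected_value(n_tosses: int) -> Fraction:
    return sum((p * x for x, p in outcome_distribution(n_tosses)), Fraction(0))


def prob_net_loss(n_tosses: int) -> Fraction:
    return sum((p for x, p in outcome_distribution(n_tosses) if x < 0), Fraction(0))


def loss_averse_value(n_tosses: int, loss_aversion: int = LAMBDA) -> Fraction:
    """Broad frame: evaluate the bundle once, scaling only net losses."""
    return sum(
        (p * (x if x >= 0 else loss_aversion * x) for x, p in outcome_distribution(n_tosses)),
        Fraction(0),
    )


def narrowly_framed_value(n_tosses: int, loss_aversion: int = LAMBDA) -> Fraction:
    """Narrow frame: judge each toss separately, then add up the n feelings."""
    return n_tosses * loss_averse_value(1, loss_aversion)


if __name__ == "__main__":
    for n in (1, 10):
        print(
            f"{n:>2} toss(es): EV ${float(expected_value(n)):.2f}, "
            f"P(net loss) {float(prob_net_loss(n)):.1%}, "
            f"broad-frame value ${float(loss_averse_value(n)):.2f}, "
            f"narrow-frame value ${float(narrowly_framed_value(n)):.2f}"
        )
```

Judged one toss at a time, the bet has an expected value of +$40 but feels like -$10, so ten separately framed tosses feel like -$100. Judged as a single bundle, the ten tosses have an expected value of $400, only an 11/64 (about 17%) chance of ending behind, and a loss-averse value of roughly $354. Having a policy, in Kahneman's sense, amounts to using the broad frame.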

6. Theory-Induced Blindness / Hindsight Bias

📖 Definitions:

Theory-induced blindness: Once you have accepted a theory, it is extraordinarily difficult to notice its flaws.

Hindsight bias is the inclination, after an event has occurred, to see the event as having been predictable, despite there having been little or no objective basis for predicting it.

Let’s talk about being wrong, and being able to admit that you’re wrong. John Kenneth Galbraith once famously said, “Faced with the choice between changing one’s mind and proving that there is no need to do so, almost everyone gets busy on the proof.” You called this “theory-induced blindness.” So why are we so unwilling to admit when we’re wrong?

“You know, you try to make the best story possible. And the best story possible quite frequently includes, ‘Actually, I didn’t make that mistake.’ You know, so something occurred – and in fact, I did not anticipate it – but in retrospect, I did anticipate it. This is called hindsight.

And one of the main reasons that we don’t admit that we’re wrong is that whatever happens, we have a story, we can make a story, we can make sense of it, we think we understand it, and when we think we understand it we alter our image of what we thought earlier.

I’ll give you a kind of example: So you have two teams that are about to play football. And the two teams are about evenly balanced. Then one of them completely crushes the other. Now after you have just seen that, they’re not equally strong. You perceive one of them as much stronger than the other and that perception gives you the sense that this must have been visible in advance, that one of them was much stronger than the other.

So hindsight is a big deal. It allows us to keep a coherent view of the world, it blinds us to surprises, it prevents us from learning the right thing, it allows us to learn the wrong thing – that is, whenever we’re surprised by something, even if we do admit that we’ve made a mistake or [you say] ‘I’ll never make that mistake again’ – but in fact, what you should learn when you make a mistake because you did not anticipate something is that the world is difficult to anticipate. That’s the correct lesson to learn from surprises. That the world is surprising.

It’s not that my prediction is wrong. It’s that predicting, in general, is almost impossible.”

Summary

There are many parallels between Daniel Kahneman’s research and Warren Buffett’s and Charlie Munger’s investment philosophy, such as:

- Don’t be too active

- Make your decisions with a long-term perspective

- Admit your mistakes

- Don’t try to predict what’s unpredictable

- Strive to become as rational as possible

What to Read and Listen to Next?

If you want to learn more about cognitive biases, heuristics, and illusions, then be sure to check out Daniel Kahneman’s awesome book Thinking, Fast and Slow.

Finally, if you want to listen to the entire interview between Daniel Kahneman and Barry Ritholtz, check out Ritholtz’s Masters in Business podcast. Ritholtz usually gets some really awesome guests from the world of finance, and the discussions are almost always incredibly interesting.


Thank you very much for this beautiful article. I enjoy reading it. I appreciate your thoughts and ideas. Well done.

Nice post, very interesting. Thanks for sharing. I’ve heard of Kahneman’s book but still haven’t gotten around to reading it. I’ve done a lot of reading on behavioral finance (I have a copy of Munger’s speech on the psychology of human misjudgment by my side at all times) but know I need to get around to this famous book.

It really is a great book! What is Munger’s speech? I’d love to hear it/read it (if I haven’t already) 🙂

I’m sure you’ve come across it, but here is the audio: https://www.youtube.com/watch?v=pqzcCfUglws

Additionally, the transcript put together by Whitney Tilson if you prefer reading. The audio is pretty comical as well as informative! Enjoy.

Transcript: http://www.rbcpa.com/mungerspeech_june_95.pdf